#Logstash ingest filebeats output windows

Sumo Logic handles Windows Event Logs nicely as well. We continue to use logback for our app logging, but Sumo now collects pretty much all our logs as well as graphite data. While slurping text logs is what it is geared for, pushing logs and metrics is also an option. Data ingestion is incredibly flexible. I have not hit any limitations with what it can do, but I did give up trying to ingest directly from logstash, as getting metadata to map was more trouble than it was worth. There is also a very well-developed chef cookbook, so installation and configuration is very manageable once you get your ingestion configured along with rulesets. That can be time consuming, but as far as cookbooks go I’d say it’s medium complexity. It’s comprehensive, as it is an entire stack. It’s easy to set up alarms, integrate with RESTful APIs for tools like Slack, perform complex data correlation, and make purdy dashboards for management.

I found the statistical capabilities similar as well, but Kibana does seem to be more actuarially adherent. As you might have guessed, I just completed a project where I moved from ELK to Sumo for production log data and metrics visualization, and it is a world of difference from Kibana in how much easier it is to get useful output. It offers most of what I remember Splunk offering without being prohibitively expensive. You can then focus on just feature configuration without having to maintain the core service.

I am not sure if you are open to commercial solutions, but Sumo Logic is an excellent ingest-type solution. Let me also say I am not a shill for this company. I’m just a DevOps guy who hates dealing with crappy and incomplete log collection. After dealing with the complexity of ELK, which is admittedly very powerful and flexible (so I have read), I can say ElasticSearch is not for the faint of heart, especially when you walk into a shop and their ELK stack is 4 years old. I also tried Loggly and LogDNA, which were nice but not as powerful and feature rich as Sumo. It’s important to know right off that Sumo is a cloud solution, so as long as you’re okay with shipping your logs and metrics over HTTPS to their servers, it’s a good way to go.

Next, copy the log file to the C:/elk folder. Open filebeat.yml and configure it. We specify the log location for filebeat to read from. The hosts setting specifies the Logstash server and the port on which Logstash is configured to listen for incoming Beats connections.

The Logstash configuration does three things. It reads input from filebeat by listening on port 5044, to which filebeat sends the data. If a log line contains a tab character followed by 'at', it tags that entry as a stacktrace. Finally, it sends the properly parsed log events to elasticsearch.
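The stack-trace rule described above (a log line containing a tab character followed by 'at' is tagged as a stacktrace) can be sketched in a few lines of Python. This is only an illustration of the rule, not the Logstash configuration itself; the function name `tag_event` and the event layout are assumptions made for the example.

```python
import re

# Assumption: a stack-trace line is any line that contains a tab character
# immediately followed by "at", as described in the text above.
STACKTRACE_RE = re.compile(r"\tat")


def tag_event(line: str) -> dict:
    """Build a minimal event dict and tag it 'stacktrace' when the rule matches.

    The dict layout (message + tags) is hypothetical and only loosely
    resembles a Logstash event.
    """
    tags = ["stacktrace"] if STACKTRACE_RE.search(line) else []
    return {"message": line, "tags": tags}


if __name__ == "__main__":
    sample_lines = [
        "2020-01-01 12:00:00 ERROR Something failed",
        "\tat com.example.Foo.bar(Foo.java:10)",
    ]
    for line in sample_lines:
        print(tag_event(line))
```

Tagging these lines separately makes it possible to treat them differently later, for example to search for them in Kibana by tag.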

#Logstash ingest filebeats output generator

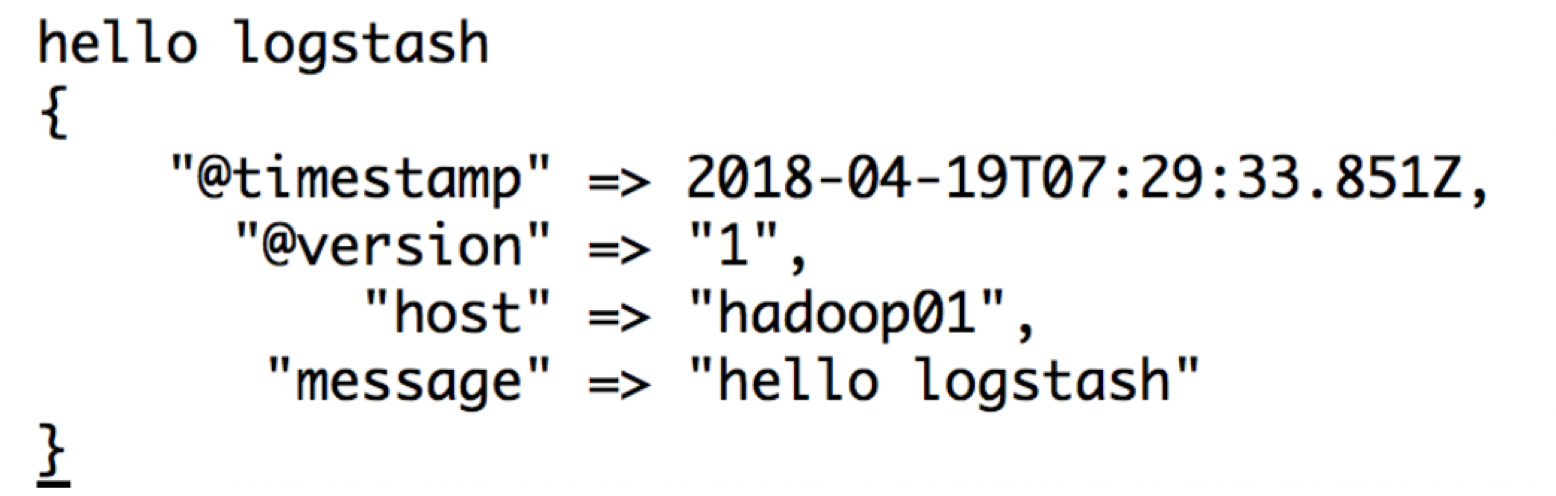

Online Grok Pattern Generator Tool for creating, testing and debugging grok patterns required for logstash. Similar to how we did in the Spring Boot + ELK tutorial, create a configuration file named nf. Here Logstash is configured to listen for incoming Beats connections on port 5044. Also, on getting some input, Logstash will filter the input and index it to elasticsearch.

This tutorial is also explained in a YouTube video.

Download the latest version of elasticsearch from Elasticsearch downloads. Run elasticsearch.bat using the command prompt. Elasticsearch can then be accessed at localhost:9200.

Download the latest version of kibana from Kibana downloads. Modify kibana.yml to point to the elasticsearch instance. Run kibana.bat using the command prompt. The kibana UI can then be accessed at localhost:5601.

Download the latest version of logstash from Logstash downloads.

When using the ELK stack we ingest data into elasticsearch, and that data is initially unstructured. We first need to break the data into a structured format and then ingest it into elasticsearch. Such data can then be used later for analysis. This manipulation of unstructured data into structured data is done by Logstash, which uses the grok filter to achieve it.
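To make the idea of turning an unstructured log line into structured fields more concrete, here is a rough Python sketch that uses a regular expression with named groups, which is similar in spirit to what a grok pattern does. The log layout (date, time, level, message) and the names `LOG_LINE` and `parse_line` are assumptions for illustration; the failure tag mirrors the `_grokparsefailure` tag that Logstash's grok filter adds when a pattern does not match.

```python
import re

# Hypothetical log layout: "<date> <time> <LEVEL> <message>".
LOG_LINE = re.compile(
    r"(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\s+"
    r"(?P<level>[A-Z]+)\s+"
    r"(?P<message>.*)"
)


def parse_line(line: str) -> dict:
    """Split one raw log line into named fields.

    Lines that do not match the assumed layout are kept whole and tagged,
    so nothing is silently dropped.
    """
    match = LOG_LINE.match(line)
    if match is None:
        return {"message": line, "tags": ["_grokparsefailure"]}
    return match.groupdict()


if __name__ == "__main__":
    print(parse_line("2020-01-01 12:00:00 INFO Application started"))
    print(parse_line("not a structured line"))
```

Once each line is a set of named fields like this, it can be indexed and then filtered or aggregated by field (for example by level) rather than searched as free text.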
